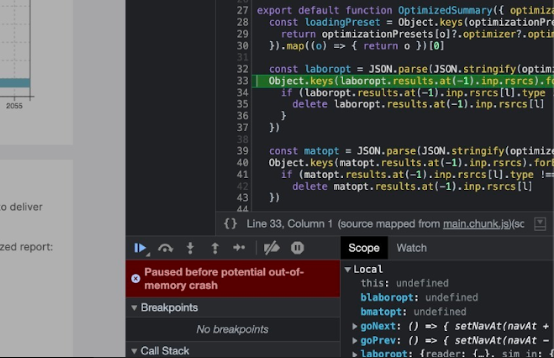

Case_Memory Crash

The problem:

    // Full deep copy #1: keep only Labor resources of the latest result
    const laboropt = JSON.parse(JSON.stringify(optimizer))
    Object.keys(laboropt.results.at(-1).inp.rsrcs).forEach((l) => {
      if (laboropt.results.at(-1).inp.rsrcs[l].type !== 'Labor') {
        delete laboropt.results.at(-1).inp.rsrcs[l]
      }
    })

    // Full deep copy #2: keep only Material resources of the latest result
    const matopt = JSON.parse(JSON.stringify(optimizer))
    Object.keys(matopt.results.at(-1).inp.rsrcs).forEach((l) => {
      if (matopt.results.at(-1).inp.rsrcs[l].type !== 'Material') {
        delete matopt.results.at(-1).inp.rsrcs[l]
      }
    })

    // Full deep copy #3: keep only Labor resources of the first (base) result
    const blaboropt = JSON.parse(JSON.stringify(optimizer))
    Object.keys(blaboropt.results.at(0).inp.rsrcs).forEach((l) => {
      if (blaboropt.results.at(0).inp.rsrcs[l].type !== 'Labor') {
        delete blaboropt.results.at(0).inp.rsrcs[l]
      }
    })

    // Full deep copy #4: keep only Material resources of the first (base) result
    const bmatopt = JSON.parse(JSON.stringify(optimizer))
    Object.keys(bmatopt.results.at(0).inp.rsrcs).forEach((l) => {
      if (bmatopt.results.at(0).inp.rsrcs[l].type !== 'Material') {
        delete bmatopt.results.at(0).inp.rsrcs[l]
      }
    })

When the above code executes `JSON.stringify(optimizer)`, it serializes the entire `optimizer` object into a JSON string. This operation can consume a significant amount of memory, especially if `optimizer` is large, because the whole serialized string is held in memory.

Following that, `JSON.parse` is called on the string to turn it back into a JavaScript object. This requires additional memory, because a complete new object is rebuilt from the string representation.

The code repeats this process four times on `optimizer`, creating four full copies of the object even though each copy is only used to filter the resources of a single result. This leads to excessive memory usage.
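
To make the cost concrete, here is a minimal sketch that uses a tiny stand-in for `optimizer`. The object shape and values are assumptions made for illustration, not the real data. It shows that one clone produces a full intermediate string plus a completely independent object graph, so four clones mean four extra copies of everything, including results that are never read.

```javascript
// Hypothetical stand-in for the real optimizer object; only the shape
// (results[].inp.rsrcs keyed by id, each entry with a `type`) mirrors the article.
const optimizer = {
  results: [
    { inp: { rsrcs: { r1: { type: 'Labor' }, r2: { type: 'Material' } } } },
    { inp: { rsrcs: { r1: { type: 'Labor' }, r2: { type: 'Material' } } } },
  ],
}

// Step 1: the whole object is serialized into one string held in memory.
const serialized = JSON.stringify(optimizer)

// Step 2: the string is parsed into a brand-new object graph.
const clone = JSON.parse(serialized)

// The clone shares nothing with the original: every nested object is duplicated.
const sharesNothing =
  clone !== optimizer &&
  clone.results[0] !== optimizer.results[0] &&
  clone.results[0].inp.rsrcs.r1 !== optimizer.results[0].inp.rsrcs.r1

// Deleting from the clone leaves the original intact. That is the reason the
// clone was made, but a full copy is a high price for filtering one result.
delete clone.results.at(-1).inp.rsrcs.r2
const originalUntouched = 'r2' in optimizer.results.at(-1).inp.rsrcs

console.log(serialized.length, sharesNothing, originalUntouched)
```

With a real `optimizer` holding many results, the string and the rebuilt object both grow with the total size of the data, not with the size of the one result being filtered.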

Calling `JSON.parse` on huge data in Chrome is like trying to put together a massive puzzle all at once. Chrome has to take each piece of information from the data and make sense of it, just as you would with the puzzle pieces.

When Chrome runs out of memory while processing that data, it’s like not having enough table space to spread out all the pieces: things get messy and chaotic.

When Chrome crashes because of a memory issue, it means it couldn’t hold all the information at once, so it has to stop and clean up the mess. This is frustrating because the web page can freeze or stop working altogether.

To mitigate this problem, we have to find another way of sorting and filtering the data in a single pass, without repeatedly serializing and parsing `optimizer`.
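
As a rough illustration of that idea, the sketch below splits a `rsrcs` map by type without cloning or mutating the source. The helper name `partitionByType` and the sample data are hypothetical. It assumes, as in the original snippet, that `rsrcs` is an object keyed by id whose entries carry a `type` field.

```javascript
// Hypothetical helper: split a rsrcs map into per-type maps in one pass.
// The source object is only read, never copied or modified.
function partitionByType(rsrcs) {
  const byType = { Labor: {}, Material: {} }
  for (const [id, resource] of Object.entries(rsrcs)) {
    // Only the two types used in the article are kept; others are skipped.
    if (Object.prototype.hasOwnProperty.call(byType, resource.type)) {
      byType[resource.type][id] = resource
    }
  }
  return byType
}

// Hypothetical sample data shaped like the optimizer in the article.
const optimizer = {
  results: [
    { inp: { rsrcs: { a: { type: 'Labor' }, b: { type: 'Material' }, c: { type: 'Equipment' } } } },
    { inp: { rsrcs: { a: { type: 'Labor' }, d: { type: 'Material' } } } },
  ],
}

const base = partitionByType(optimizer.results.at(0).inp.rsrcs)
const latest = partitionByType(optimizer.results.at(-1).inp.rsrcs)

console.log(Object.keys(base.Labor), Object.keys(latest.Material))
```

The returned maps hold references to the original resource objects rather than copies, so this only fits code that reads the filtered data and does not modify it.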

The fix:

    const resourcesData = {
      labor: {
        base: [],
        new: []
      },
      material: {
        base: [],
        new: []
      },
      labor_resource_distribution: {
        base: [],
        new: []
      },
      material_resource_distribution: {
        base: [],
        new: []
      }
    }

A container object is created up front with an empty array for each category: labor, material, and their distributions, each split into base and new. The filtered data is pushed into these arrays, which keeps the result clean, organized, and easy to understand.

    if (newResources) {
      newResources.forEach((l) => {
        if (l.type.toLowerCase() === 'labor') {
          // Copy only the fields that are needed
          resourcesData.labor.new.push({
            name: l.name,
            distribution: l.distribution,
            id: l.id,
            type: l.type,
          })
          if (l.distribution) {
            // Flatten the distribution into { x, y } points
            Object.entries(l.distribution.xy).forEach(([key, value]) =>
              resourcesData.labor_resource_distribution.new.push({
                x: key,
                y: value.data
              })
            )
          }
        }
        if (l.type.toLowerCase() === 'material') {
          resourcesData.material.new.push({
            name: l.name,
            distribution: l.distribution,
            id: l.id,
            type: l.type,
          })
          if (l.distribution) {
            Object.entries(l.distribution.xy).forEach(([key, value]) =>
              resourcesData.material_resource_distribution.new.push({
                x: key,
                y: value.data
              })
            )
          }
        }
      })
    }

The rewritten code replaces the repeated clone-and-delete passes with a single loop that handles every resource once, which reduces duplication and makes the code more concise. The clearer variable names also give better context about the purpose and content of the data being processed.

Overall, the rewritten code demonstrates better code organization, clarity, and efficiency, making it easier to read, understand, and maintain.

And most importantly, it solved the memory issue.
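
The consolidation could be taken one step further, since the labor and material branches differ only in the key they write to. The following is a sketch under assumptions rather than the code used in the project: `createResourcesData`, `collectResources`, the `slot` parameter, and the sample resources are all hypothetical names introduced for illustration.

```javascript
// Hypothetical factory for the container object shown in the article.
function createResourcesData() {
  return {
    labor: { base: [], new: [] },
    material: { base: [], new: [] },
    labor_resource_distribution: { base: [], new: [] },
    material_resource_distribution: { base: [], new: [] },
  }
}

// Hypothetical helper: file each resource under its type and slot
// ('base' or 'new') in one pass, sharing the logic between both types.
function collectResources(resourcesData, resources, slot) {
  if (!resources) return resourcesData
  for (const r of resources) {
    const kind = String(r.type).toLowerCase()
    // Resources that are neither labor nor material are skipped.
    if (kind !== 'labor' && kind !== 'material') continue

    // Copy only the fields that are needed.
    resourcesData[kind][slot].push({
      name: r.name,
      distribution: r.distribution,
      id: r.id,
      type: r.type,
    })

    if (r.distribution) {
      // Flatten the distribution into { x, y } points.
      for (const [key, value] of Object.entries(r.distribution.xy)) {
        resourcesData[`${kind}_resource_distribution`][slot].push({ x: key, y: value.data })
      }
    }
  }
  return resourcesData
}

// Hypothetical sample input.
const newResources = [
  { id: 1, name: 'Welder', type: 'Labor', distribution: { xy: { 10: { data: 0.2 }, 20: { data: 0.8 } } } },
  { id: 2, name: 'Steel', type: 'material' },
]

const resourcesData = collectResources(createResourcesData(), newResources, 'new')
console.log(resourcesData.labor.new.length, resourcesData.labor_resource_distribution.new)
```

Passing `'base'` as the slot would fill the base arrays with the same function, assuming the base resources have the same shape as the new ones.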